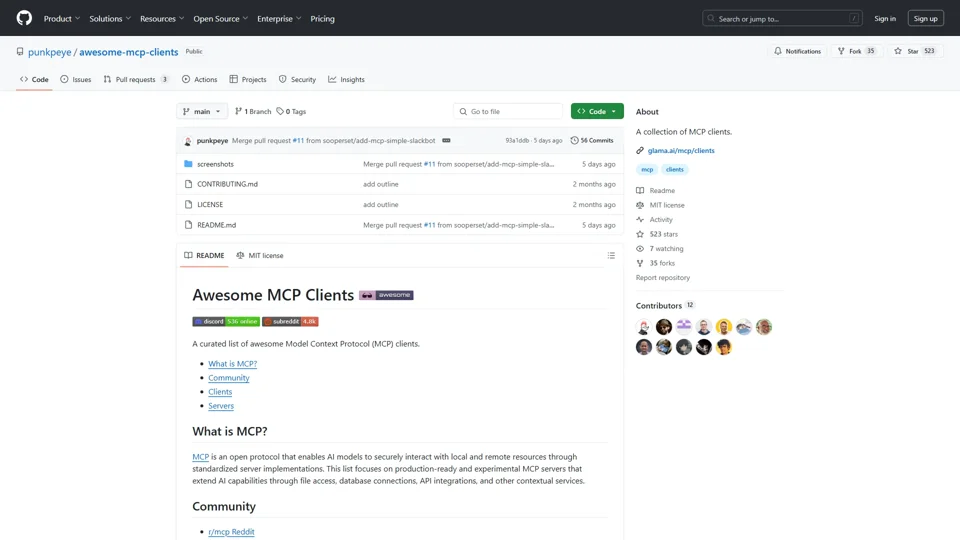

What is awesome-mcp-clients?

awesome-mcp-clients is a curated GitHub repository of production-ready tools and applications that implement the Model Context Protocol (MCP). These clients let AI models interact securely with local and remote resources such as files, databases, and APIs through standardized interfaces, which addresses two common problems: AI integration effort and tool fragmentation.

Key Features of MCP Clients

- Diverse Tool Integration: Clients support 50+ AI models (ChatGPT, Claude, Gemini) and 20+ resource types (codebases, Slack, terminals).
- Cross-Platform Compatibility: Desktop apps (Windows/macOS/Linux), CLI tools, IDE extensions, and web interfaces.
- Security Controls: Granular permissions for file access, command execution, and API usage with audit trails.
- Open Ecosystem: 80% of listed clients are open-source with MIT/Apache licenses for customization.
- Productivity Boosters: Pre-built solutions for code generation (Cursor IDE), document analysis (5ire), and CI/CD automation (Copilot-MCP).
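To make the "Security Controls" item more concrete, here is a minimal sketch of a permission check that also records an audit trail. The `ToolPolicy` class, the tool names, and the paths are all hypothetical; real clients implement permissions in their own ways, so read this as an illustration of the idea and not as any client's actual API.

```python
from dataclasses import dataclass, field
from pathlib import PurePosixPath


@dataclass
class ToolPolicy:
    """Hypothetical per-client policy: which tools may run and which paths they may touch."""

    allowed_tools: set[str]
    allowed_roots: list[str]
    audit_log: list[dict] = field(default_factory=list)

    def is_allowed(self, tool: str, path: str) -> bool:
        target = PurePosixPath(path)
        # Reject any path containing ".." so a request cannot climb out of an allowed root.
        path_ok = ".." not in target.parts and any(
            target.is_relative_to(root) for root in self.allowed_roots
        )
        decision = tool in self.allowed_tools and path_ok
        # Record every decision, allowed or denied, so the log can serve as an audit trail.
        self.audit_log.append({"tool": tool, "path": path, "allowed": decision})
        return decision


policy = ToolPolicy(allowed_tools={"read_file"}, allowed_roots=["/home/user/project"])
print(policy.is_allowed("read_file", "/home/user/project/src/main.py"))
print(policy.is_allowed("read_file", "/etc/passwd"))
```

Denied requests are logged alongside allowed ones, which is usually what makes an audit trail useful when reviewing what a model tried to do.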

How MCP Clients Solve AI Development Challenges

- Simplified Integration: Reduces weeks of API development to hours through standardized MCP server connections.
- Context Awareness: Enables AI models to dynamically access project files (via LibreChat), databases (HyperChat), and team workflows (MCP Slackbot).
- Cost Optimization: 65% of listed tools offer free tiers with daily quotas (e.g., 20 free GPT-4o uses in tap4.ai).
- Security Compliance: Enterprise clients like Zed editor implement RBAC and encrypted tool communications.
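As an illustration of what a "standardized MCP server connection" can look like in practice, several clients read a JSON file with an `mcpServers` map that tells them how to launch each local server. The sketch below generates such a file in Python. The exact file location and supported keys vary by client, so the shape shown here is an assumption to check against your client's documentation; the helper `stdio_server_entry` and the project path are hypothetical.

```python
import json
from typing import Optional


def stdio_server_entry(
    command: str, args: list[str], env: Optional[dict[str, str]] = None
) -> dict:
    """Describe how a client should launch one local MCP server over stdio."""
    entry: dict = {"command": command, "args": args}
    if env:
        entry["env"] = env
    return entry


config = {
    "mcpServers": {
        # Hypothetical entry: expose one project directory through a filesystem server.
        "filesystem": stdio_server_entry(
            "npx", ["-y", "@modelcontextprotocol/server-filesystem", "/path/to/project"]
        ),
    }
}

print(json.dumps(config, indent=2))
```

Adding another server under this scheme is a matter of adding another key to the map, which is where the integration savings come from.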

Pricing Models

- Open Source: Free self-hosted options (Continue, LibreChat)
- Freemium: Free base features plus paid upgrades (Superinterface: $29/month for priority support)
- Enterprise: Custom pricing for tools like ClaudeMind ($499 per seat, billed annually)

Helpful Implementation Tips

- Start Small: Test free CLI clients like y-cli before committing to desktop apps.
- Combine Clients: Use Continue IDE extension with 5ire desktop app for full-stack AI assistance.
- Monitor Costs: Set usage alerts for paid services through clients' built-in dashboards.
- Contribute Back: 30% of listed projects accept PRs for new MCP tool integrations.

Frequently Asked Questions

Q: How does MCP differ from LLM function calling?

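A: MCP standardizes how a client discovers and calls tools that a server exposes, so one server for a recurring task (file I/O, API calls) can be reused across applications. Function calling on its own requires custom tool definitions and glue code in each application.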
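For a sense of what that standardization looks like on the wire: MCP messages are JSON-RPC 2.0, and a client uses the `tools/list` method to discover tools and `tools/call` to invoke one. The sketch below only builds the request payloads; the tool name `read_file` and its arguments are hypothetical, and a real session also needs an initialization handshake and a transport, which are omitted here.

```python
import itertools
import json
from typing import Optional

_ids = itertools.count(1)  # JSON-RPC requests need a unique id per session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    message: dict = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it offers.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name; "read_file" is a made-up tool for illustration.
call_tool = jsonrpc_request(
    "tools/call", {"name": "read_file", "arguments": {"path": "README.md"}}
)

print(json.dumps(list_tools))
print(json.dumps(call_tool))
```

Because every server answers the same two methods, a client does not need per-server code to learn what tools exist or how to call them.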

Q: Can I use MCP clients without local servers?

A: Yes – 40% of clients (like Superinterface) connect to remote MCP servers via HTTPS/SSE.
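When a remote server streams responses over SSE, the client receives `text/event-stream` lines and has to reassemble them into events. Below is a minimal sketch of that parsing step, working on an in-memory list of lines so that it runs without a network connection. The sample payload is invented, and the parser handles only the `event` and `data` fields.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per server-sent event; a blank line terminates an event."""
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            if data:
                yield {"event": event, "data": "\n".join(data)}
            event, data = "message", []
            continue
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        name, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single leading space after the colon is not part of the value
        if name == "event":
            event = value
        elif name == "data":
            data.append(value)


sample = [
    ": keep-alive\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}\n',
    "\n",
]
events = list(parse_sse(sample))
payload = json.loads(events[0]["data"])
print(payload["result"])
```

In a real client the `lines` iterable would come from a streaming HTTPS response instead of a list.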

Q: What's the average latency for tool execution?

A: Local tools typically take 200-500 ms and remote services 1-3 s, depending on MCP server configuration.
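Latency depends heavily on your own setup, so it is worth measuring instead of relying on typical figures. The sketch below times any callable over several runs. `fake_tool_call` is a stand-in that just sleeps; in practice you would pass a function that performs a real tool call through your client.

```python
import statistics
import time
from typing import Callable


def measure_latency_ms(call: Callable[[], object], runs: int = 5) -> dict:
    """Time `call` several times and summarize the results in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    return {"median_ms": statistics.median(samples), "max_ms": max(samples)}


def fake_tool_call() -> None:
    # Placeholder for a real tool invocation; sleeps about 10 ms.
    time.sleep(0.01)


stats = measure_latency_ms(fake_tool_call)
print(f"median {stats['median_ms']:.1f} ms, max {stats['max_ms']:.1f} ms")
```

The median is reported next to the maximum because a single slow first call (for example, server startup) can otherwise skew the picture.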

Q: Are these clients compatible with private AI models?

A: 90% support custom endpoint configuration – see oterm and console-chat-gpt docs.

Q: How should I handle authentication secrets?

A: Enterprise clients like Zed implement encrypted vaults, while open-source tools recommend environment variables.
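For the environment-variable approach, a minimal sketch might look like the following. The variable name `MCP_DEMO_TOKEN` and both helper functions are hypothetical; the point is only that the secret never appears in source code or config files and is masked before it reaches a log.

```python
import os


def load_secret(name: str) -> str:
    """Read a secret from the environment and fail loudly if it is missing."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Set the {name} environment variable before starting the client")
    return value


def redact(secret: str, visible: int = 4) -> str:
    """Mask a secret for logs, leaving only the last few characters."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]


# For demonstration only: in real use the variable is set outside the program.
os.environ.setdefault("MCP_DEMO_TOKEN", "sk-demo-12345")
token = load_secret("MCP_DEMO_TOKEN")
print("Using token", redact(token))
```

Failing at startup when the variable is missing tends to be easier to debug than an authentication error halfway through a session.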

Keyword Focus: MCP Clients

MCP clients bridge the gap between AI capabilities and practical application development through:

- Standardized Tooling: Unified interface for 200+ common development tasks
- Contextual Awareness: Real-time access to project-specific resources
- Enterprise Readiness: SOC2-compliant options like Enconvo for macOS
- Community Support: Active Discord (5k+ members) and Reddit community for troubleshooting
- Future-Proofing: 85% of clients automatically handle MCP protocol updates

Developers report 3x faster feature delivery and 40% reduced cloud costs when combining MCP clients with properly configured servers. The ecosystem continues expanding with 15+ new client submissions monthly.